Chips are great, whether we’re talking about the fried potato variety or the silicon wafer kind in either Central Processing Unit (CPU) or Graphics Processing Unit (GPU) variants. But processing always has a shelf-life and we all know the wider effects of Moore’s Law these days: we miniaturized transistors down to a point where we had to start clustering to get more power… and so on. But this is a brief history of chips as we used to know them… our next future in this space will of course be defined by photonic integrated circuits, which use light to process at high speed with low latency and (crucially in this age of sustainability-focused computing) at low power.

Photonics is at the heart of the Innovative Optical and Wireless Network (IOWN) initiative and now we see NTT Corporation (NTT) and Red Hat, Inc., in collaboration with Fujitsu as well as capitalization enthusiasts Nvidia, coming together with a jointly developed solution to enhance and extend the potential for real-time artificial intelligence (AI) data analysis at the edge.

What is IOWN?

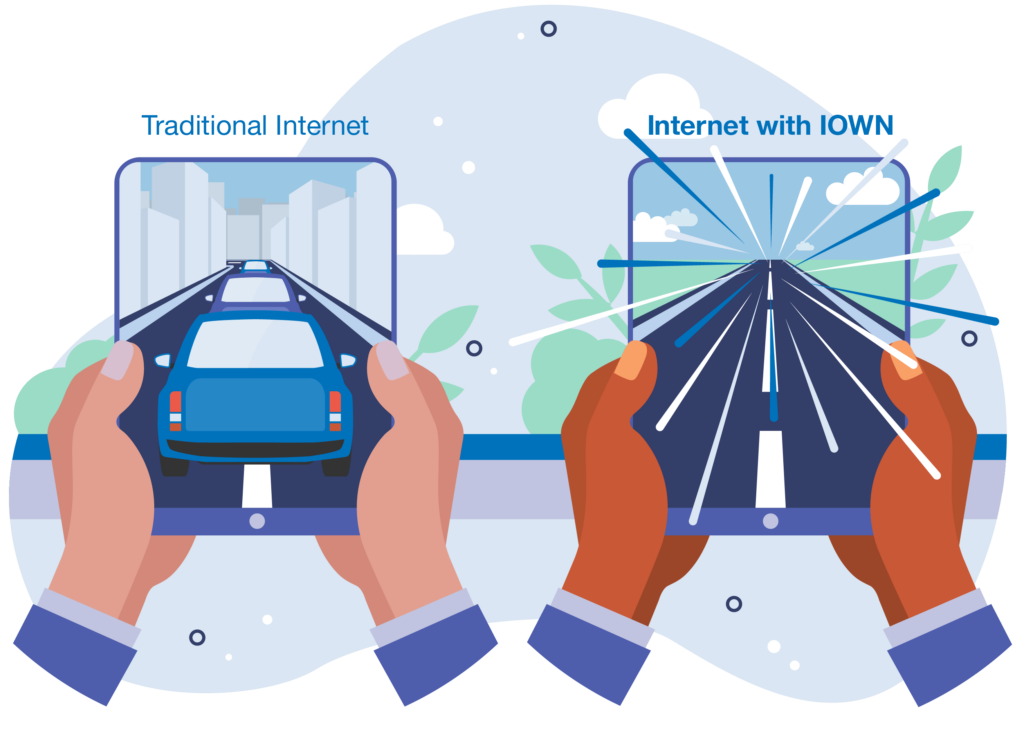

Innovative Optical and Wireless Network (IOWN) is an initiative for future communications infrastructure to create a smarter world by using cutting-edge technologies. IOWN is built around photonic technology for ultra-high capacity, ultra-low latency and ultra-low power consumption.

Today, most of our devices and technologies (phones, watches, gaming, sensors, PCs, servers, networks, etc.) use electronics to process and transmit information. IOWN will use optical technologies to transform these electronic connections into photonic connections, increasing transmission speeds and improving responsiveness while consuming extremely low levels of power.

IOWN involves devices, networks and information processing infrastructure built on optical and other technologies to deliver high-speed and high-capacity communications and computing resources. IOWN consists of three key areas of technology:

- The All-Photonics Network (APN), which applies optical technology end to end. APNs seek to overcome existing network limitations by converting all signals into optical signals, thereby creating a network with higher capacity, lower latency and lower energy consumption than today's networks.

- Digital Twin Computing (DTC) for advanced, real-time interaction between objects and people in cyberspace.

- The Cognitive Foundation (CF), which deploys various ICT resources efficiently, including the above.

Using technologies developed by the IOWN Global Forum and built on the foundation of Red Hat OpenShift, a hybrid cloud application platform powered by Kubernetes, this solution has received IOWN Global Forum Proof of Concept (PoC) recognition for its real-world viability and use cases.

Triage input at the edge

As AI, sensing technology and networking continue to accelerate, using AI analysis to assess and triage input at the network’s edge will be critical, especially as data sources expand almost daily. Using AI analysis on a large scale, however, can be slow and complex, and can be associated with higher maintenance costs and software upkeep to onboard new AI models and additional hardware. With edge computing capabilities emerging in more remote locations, AI analysis can be placed closer to the sensors, reducing latency and increasing bandwidth.

This solution consists of the IOWN All-Photonics Network (APN) and data pipeline acceleration technologies in IOWN Data-Centric Infrastructure (DCI). NTT’s accelerated data pipeline for AI adopts Remote Direct Memory Access (RDMA) over APN to efficiently collect and process large amounts of sensor data at the edge. Container orchestration technology from Red Hat OpenShift provides greater flexibility to operate workloads within the accelerated data pipeline across geographically distributed and remote datacenters. NTT and Red Hat have successfully demonstrated that this solution can effectively reduce power consumption while maintaining lower latency for real-time AI analysis at the edge.

“The NTT Group, in great collaboration with partners, is accelerating the development of IOWN to achieve a sustainable society. This IOWN PoC is an important step forward toward green computing for AI, which supports collective intelligence of AI. We are further improving IOWN’s power efficiency by applying Photonics-Electronics Convergence technologies to a computing infrastructure. We aim to embody the sustainable future of net zero emissions with IOWN,” said Katsuhiko Kawazoe, senior executive vice president of NTT and chairman of IOWN Global Forum, while speaking at Mobile World Congress 2024 in Barcelona.

From Yokosuka City to Musashino City

The proof of concept evaluated a real-time AI analysis platform with Yokosuka City as the sensor installation base and Musashino City as the remote datacenter, both connected via APN. As a result, even when a large number of cameras were accommodated, the latency required to aggregate sensor data for AI analysis was reduced by 60% compared to conventional AI inference workloads. Additionally, the IOWN PoC testing demonstrated that the power consumption required for AI analysis for each camera at the edge could be reduced by 40% compared with conventional technology.

This real-time AI analysis platform allows the GPU to be scaled up to accommodate a larger number of cameras without the CPU becoming a bottleneck. According to a trial calculation, assuming that 1,000 cameras can be accommodated, it is expected that power consumption can be further reduced by 60%.
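To make those percentages concrete, here is a minimal sketch of the arithmetic. The baseline of 100 units is a hypothetical normalised figure (the announcement gives no absolute numbers), and the 1,000-camera line assumes the 60% figure is measured against the same conventional baseline, which the source does not spell out.

```python
def reduced(baseline: float, reduction_pct: float) -> float:
    """Return what remains of `baseline` after cutting it by `reduction_pct` percent."""
    return baseline * (100 - reduction_pct) / 100


# Hypothetical normalised baselines: the conventional setup counts as 100 units.
BASELINE_LATENCY = 100.0
BASELINE_POWER_PER_CAMERA = 100.0

# Figures reported for the PoC.
latency_poc = reduced(BASELINE_LATENCY, 60)          # aggregation latency, 60% lower
power_poc = reduced(BASELINE_POWER_PER_CAMERA, 40)   # per-camera power, 40% lower

# Trial calculation for 1,000 cameras (assumed to be relative to the same baseline).
power_1000_cameras = reduced(BASELINE_POWER_PER_CAMERA, 60)

print(f"Latency vs conventional:           {latency_poc:.0f} units")
print(f"Per-camera power in the PoC:       {power_poc:.0f} units")
print(f"Per-camera power at 1,000 cameras: {power_1000_cameras:.0f} units")
```

If the 60% figure were instead meant as a cut on top of the 40% already achieved, the last line would come out lower still, so treat these numbers as illustrative only.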

“Over the last few years, we’ve worked as part of IOWN Global Forum to set the stage for AI innovation powered by open source and deliver technologies that help us make smarter choices for the future. This is important and exciting work, and these results help prove that we can build AI-enabled solutions that are sustainable and innovative for businesses across the globe. With Red Hat OpenShift, we can help NTT provide large-scale AI data analysis in real-time and without limitations,” said Chris Wright, chief technology officer and senior vice president of Global Engineering at Red Hat and board director of IOWN Global Forum.

Features here include:

- Accelerated data pipeline for AI inference, provided by NTT, utilizing RDMA over APN to directly fetch large-scale sensor data from local sites into the memory of an accelerator in a remote datacenter, reducing the protocol-handling overhead of a conventional network. It then completes the data processing for AI inference within the accelerator with less CPU control overhead, improving the power efficiency of AI inference.

- Large-scale, real-time AI data analysis, powered by Red Hat OpenShift, which supports Kubernetes operators to minimize the complexity of implementing hardware-based accelerators (GPUs, DPUs, etc.), enabling improved flexibility and easier deployment across disaggregated sites, including remote datacenters.

- This PoC uses NVIDIA A100 Tensor Core GPUs and NVIDIA ConnectX-6 NICs for AI inference.
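As a rough illustration of what operator-managed accelerators look like from the workload side, the sketch below builds a Kubernetes Pod manifest that asks the scheduler for one GPU. It is not the PoC's actual configuration: the pod name, container name and image are hypothetical, and it assumes the cluster exposes GPUs under the `nvidia.com/gpu` resource name, as NVIDIA's Kubernetes device plugin does.

```python
import json


def gpu_inference_pod(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that requests `gpus` NVIDIA GPUs for one container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "inference",  # hypothetical container name
                    "image": image,
                    # Extended resource advertised by the NVIDIA device plugin;
                    # the scheduler places the pod only on a node with a free GPU.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical pod name and image, used purely for illustration.
manifest = gpu_inference_pod(
    "camera-inference", "registry.example.com/inference:latest"
)
print(json.dumps(manifest, indent=2))
```

The printed JSON could be saved to a file and applied with the cluster's usual tooling; the point is simply that the workload declares what it needs and leaves driver and device setup to the operator.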

The collective hope here is that technology firms can contribute to the realization of a sustainable and smarter society by applying technologies for smarter disaggregated computing.