Using more than 1,000 enterprise cloud implementation reviews of SAP, UNIT4, Oracle HCM, Workday, UKG, ServiceNow and Cornerstone, Raven Intel debunks six common myths about consulting partners and digital transformation efforts.

Raven Intel is a peer review site for enterprise software consulting. Its mission is to help software customers choose the best systems integrator (SI) for their project. It has spoken with software customers of every region, industry and size and has amassed reviews of 1,030 enterprise software implementations, particularly in HCM (publicly available at RavenIntel.com). Raven has analysed the results and offers the following insights into the complex world of enterprise software consulting, several of which turn conventional wisdom on its head. Before we bust the ‘myths’, let’s review 2020, as several important trends emerged over the past 12 months.

2020 Year in Review

2020 was a challenging year for the world of enterprise software projects. While it accelerated many digital transformation efforts, particularly in industries like healthcare, retail, government and manufacturing, the challenge of delivering projects in a fully remote environment was something that customers, vendors and SIs needed to adapt to.

In 2020, these trends included:

> Types of project work. Higher volume of Phase 2/optimisation projects (up 25%) and somewhat fewer Phase 0/1 full-suite implementations (down 10% compared with 2018-19).

> Industry hotspots. More projects in healthcare and manufacturing (both up 15% from previous years) and in government (up 4%).

> Scope changes. 48% changed the scope of their project while in-flight.

> Delivery. On-time delivery rose by about 10% and on-budget delivery by 8%, likely because projects were smaller in nature. Overall, approximately 60% of projects were delivered on time and on budget, and 40% were late and/or over budget.

> Satisfaction. Overall project satisfaction was higher than in previous years by close to 1 point out of 10, with scores hovering in the 8s.

> Recurring theme among satisfied projects: FLEXIBILITY.

Customer quotes:

“Vendor/SI was flexible with changing needs.”

“Strong understanding of our broader challenges.”

“Willingness to adapt to our changes.”

Myth 1

The bigger the SI the better.

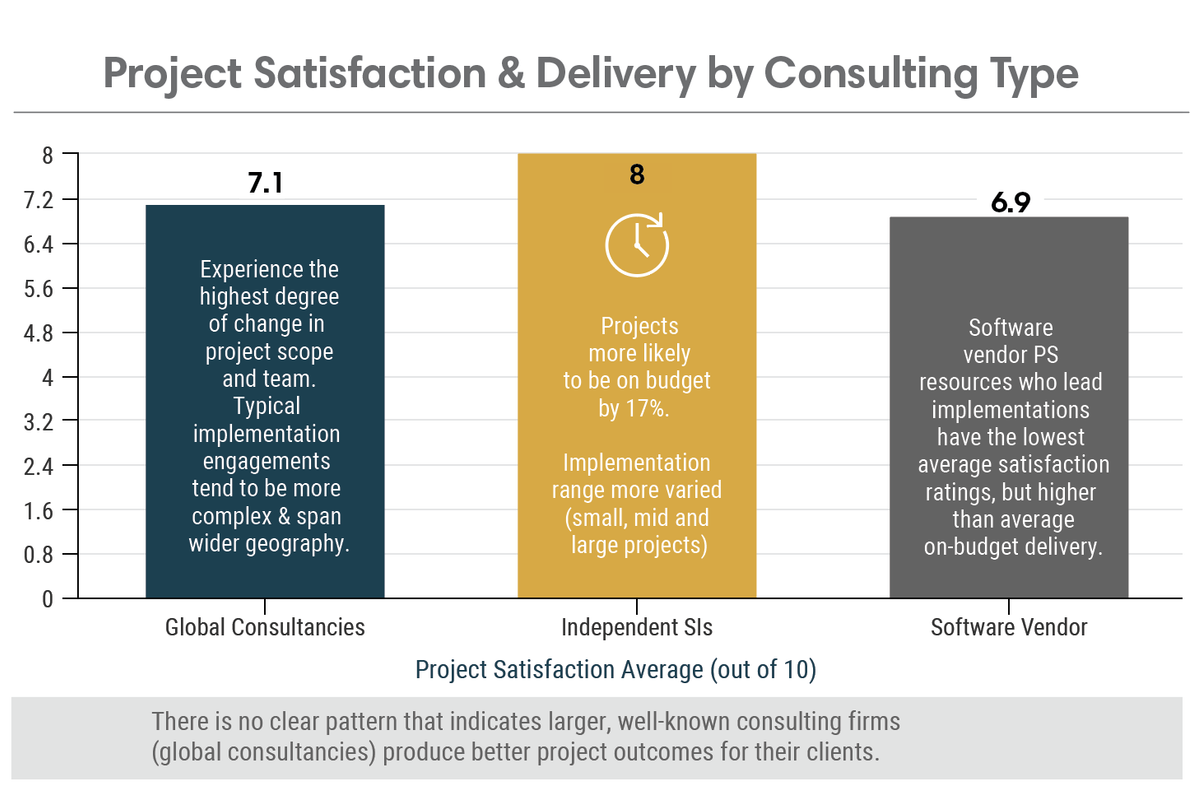

Fact: There is no correlation between consulting partner size and customer satisfaction.

There is no clear pattern indicating that larger, well-known consulting firms produce better project outcomes for their clients. Overall project satisfaction is highest for work done by independent firms (7.9 out of 10), by a margin of close to a full point, and lowest for projects delivered by the software vendor itself (less common, but some customers engage the software vendor directly instead of an SI firm). Work done by global consultancies is rated 7.1 out of 10 on average. In addition, projects completed by independent firms are 17 percent more likely to be delivered on budget.

Takeaway: There are more important criteria than size and brand name to consider when choosing an SI. While large firms will boast a global footprint and may have worked on broader management consulting projects for your organisation, choosing a well-known or large partner for the software implementation is no guarantee of success.

Myth 2

Price is everything.

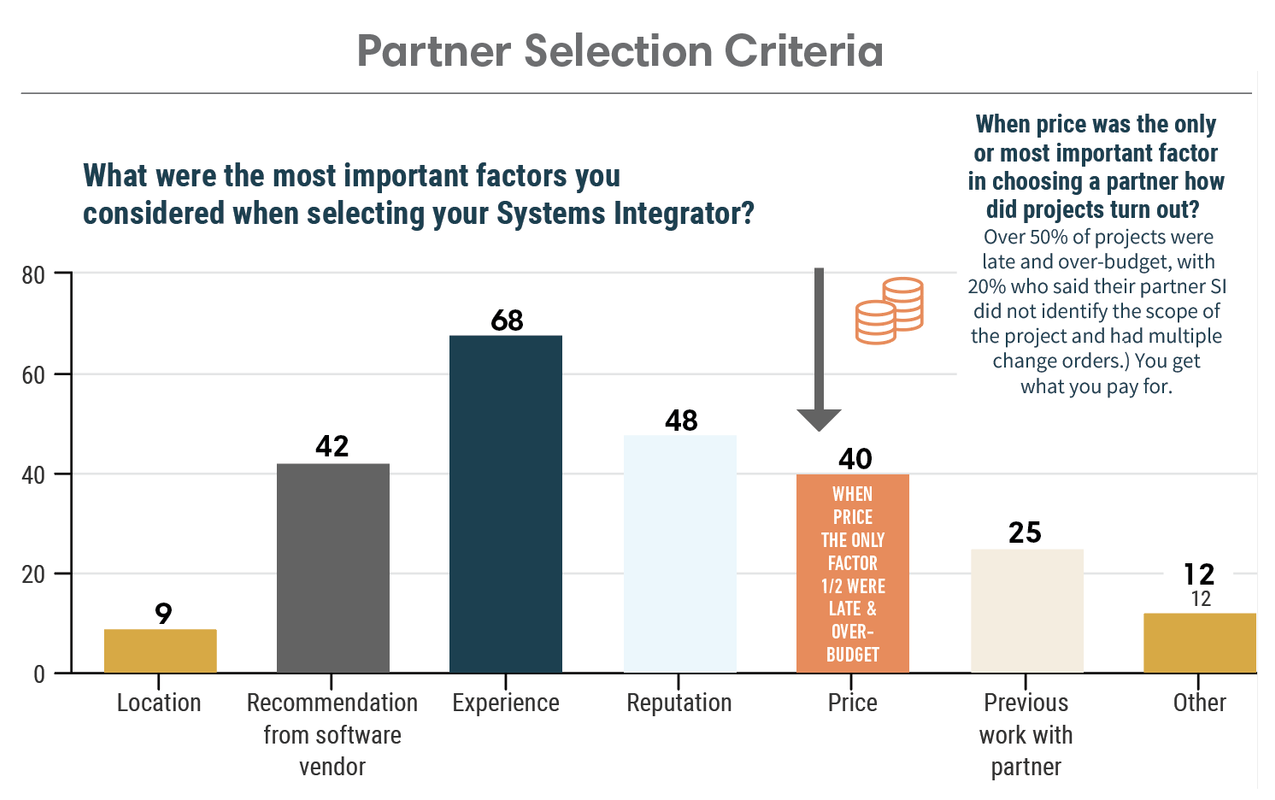

Fact: Customer satisfaction is highest for projects where price is a factor – but not the only one.

Projects where price is the only important criterion rate lower on virtually every measure: 1) project satisfaction, 2) on-budget delivery, 3) on-time delivery and 4) project scoping quality.

Raven Intel asks reviewers what factors were important in choosing their implementation partner. The most common answers were:

> Experience – 68%

> Reputation – 48%

> Vendor recommendation – 42%

> Price – 40%

> Previous experience with consultant – 25%

> Location – 9%

When price was the only or most important factor in choosing a partner, how did projects turn out? Over half were late and over budget, and 20 percent of reviewers said their SI did not identify the scope of the project and that the project had multiple change orders.

Takeaway: If you choose a partner based on price alone, chances are you will end up paying more in the end: the project is more likely to be under-scoped, and the resulting change orders cost more money. Customers with a more holistic view of partner selection criteria end up with better projects in the long run.

Myth 3

Project teams change – it’s not that big a deal.

Fact: Team change is one of the biggest downfalls of a project – particularly if the project manager changes.

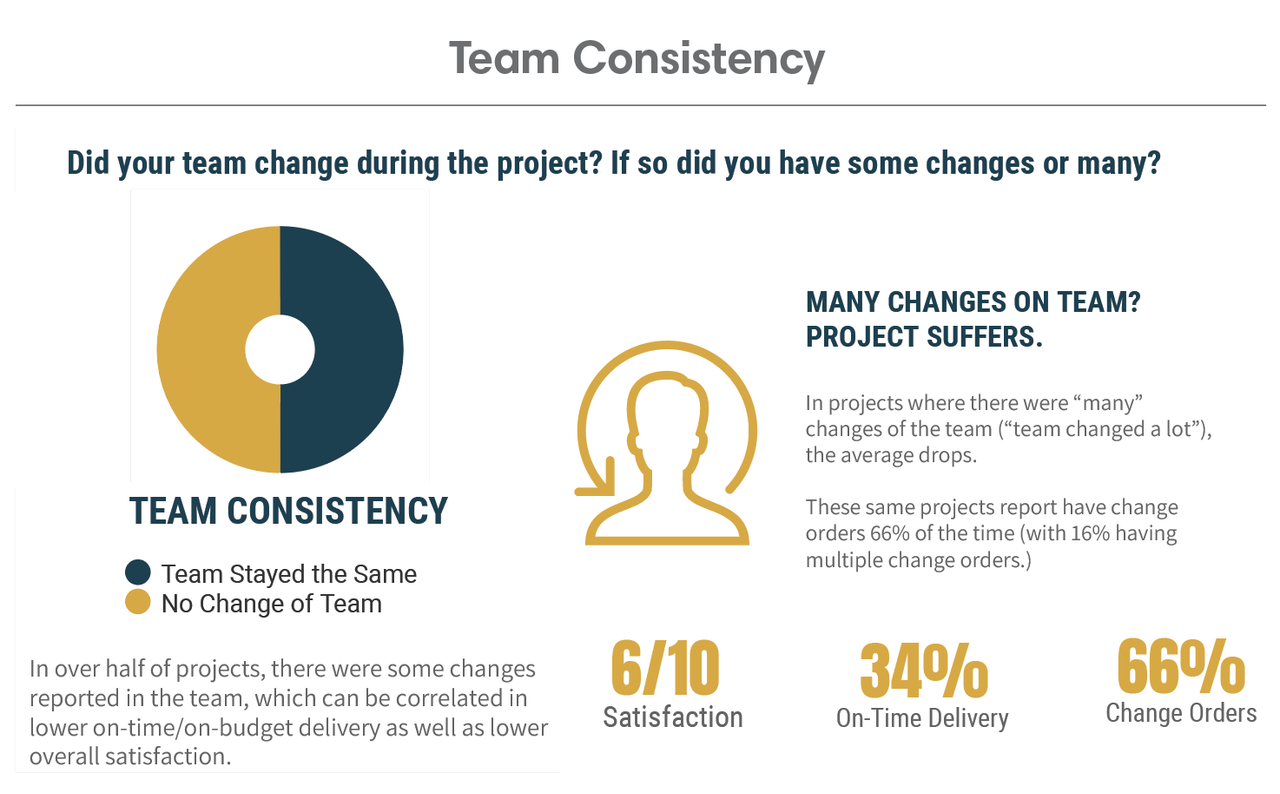

Raven Intel asks whether the team initially assigned to the project stayed intact, or whether there were ‘some’ or ‘many’ changes over the course of the project. In over half of projects, reviewers reported some team changes, which correlate with lower on-time/on-budget delivery and lower overall satisfaction (by 1 full point out of 10) compared with projects with no team change. In projects with ‘many’ team changes (‘team changed a lot’), average project satisfaction drops to 6/10, with only 34 percent delivered on time and 30 percent on budget. These same projects report having change orders 66 percent of the time (with 16 percent having multiple change orders).

Takeaway: Keeping the team consistent throughout a project is important. Knowledge transfer to new players is difficult, and maintaining cohesion throughout the project is directly correlated with success. Make sure the SI you hire doesn’t ‘bait and switch’ by introducing you to one team and then assigning different players to your project, or by changing players mid-project.

Myth 4

Change orders are no big deal.

Fact: Change orders are a big contributor to dissatisfaction and project failure.

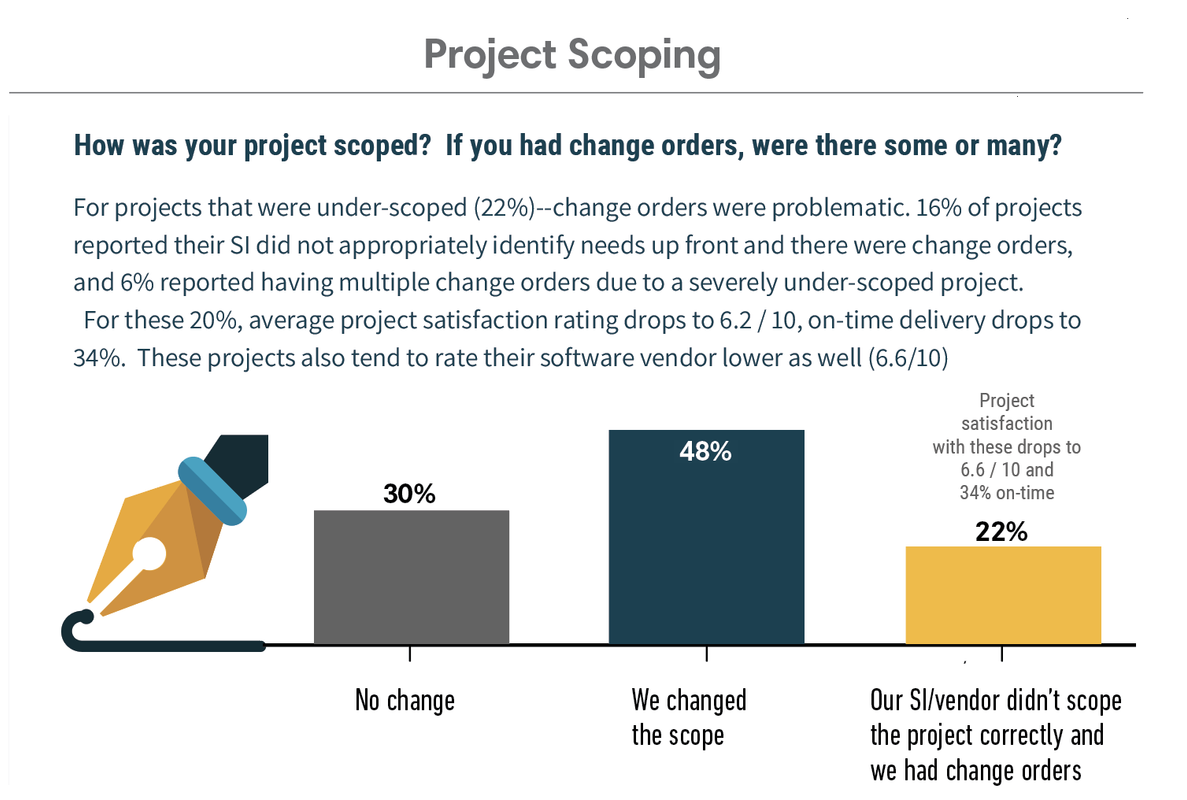

30 percent of projects reported no change in scope, while 48 percent reported changes because their own organisation changed the scope of the project. For the remaining 22 percent, change orders were problematic: 16 percent of projects reported that their SI did not appropriately identify needs up front, leading to change orders, and six percent reported multiple change orders due to a severely under-scoped project.

For these 22 percent, the average project satisfaction rating drops to 6.2/10 and on-time delivery drops to 34 percent. These projects also tend to rate their software vendor lower (6.6/10).

Takeaway: Read the ‘statement of work’ provided by your SI and have your whole team read it as well. Ask a lot of questions. This sounds basic, but in some cases we’ve heard that the software salesperson ‘sold’ the consulting engagement alongside the software agreement as a rapid deployment, setting the wrong expectations about project scope and delivery. This never ends well.

Myth 5

You can clearly judge whether a project is a success or a failure.

Fact: It depends on where you sit in the organisation.

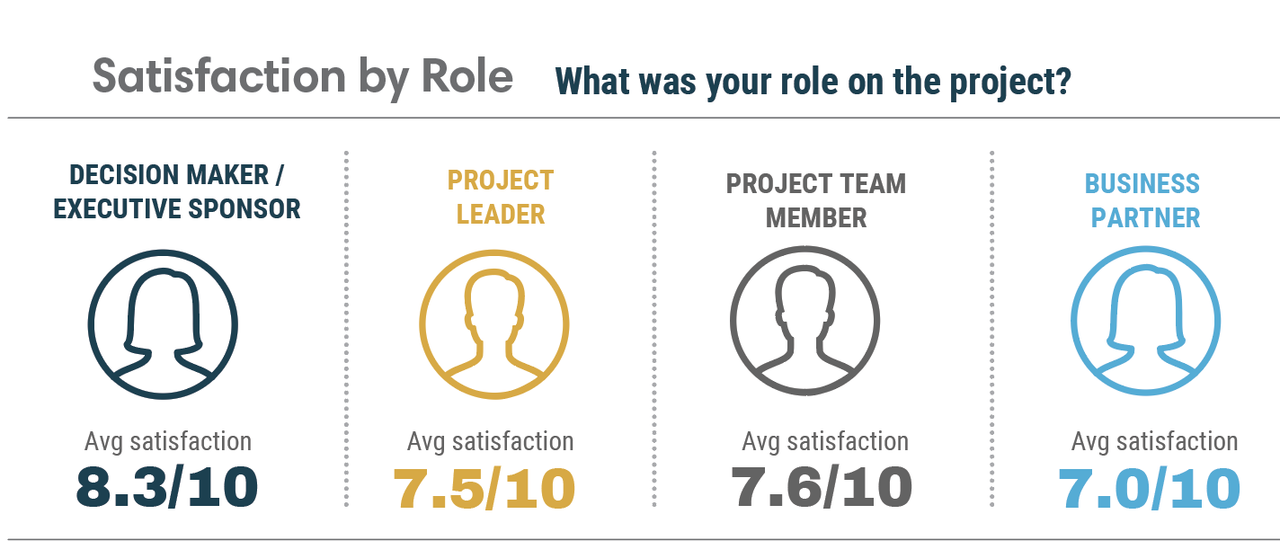

Reviewers who classify their role as the ‘decision maker’ typically rate their satisfaction with projects higher (8.3/10) than those who classify their role as ‘project manager’ or ‘project team member’ (7.5/10). At the other end of the spectrum are those who describe their role as ‘other’, such as a subject matter expert or business lead, who on average rate projects lowest (7.0/10).

Decision makers may rate the partner more highly because the project team is looking to present its progress in the best possible light. An executive sponsor or decision maker who isn’t involved in the project day to day may not appreciate the difficulties the project team faced to get the project up and running. All of these reviewers’ perspectives are needed to get a full 360-degree view of the implementation partner.

Takeaway: When choosing your consulting firm, be sure to take into account multiple points of view within the organisation, not just that of the executive sponsor, who typically isn’t as aware of the day-to-day mechanics of a project.

Myth 6

Integrations are hard.

Fact: Integrations are harder than you think.

Integrations are one of the most commonly cited problems in the ‘lessons learned’ area of Raven’s reviews. Often the ‘ease’ of integrating with another system is oversold during the sales process, and customers end up frustrated by the additional resources, time and cost involved in getting systems to talk to one another. One recent reviewer commented: “Lining up a technical resource on the vendor side and keeping momentum with the integration development was challenging. It also generated unexpected vendor costs.” We’ve heard this time and time again.

Takeaway: Make sure you understand exactly how an integration will work, even those labelled as standard or pre-built. Before the project starts, your technical team should check the fields that will be mapped and understand how the data transfers actually work. As a rule of thumb, double the cost and effort in the initial quote to avoid surprises down the road.

Final Thoughts

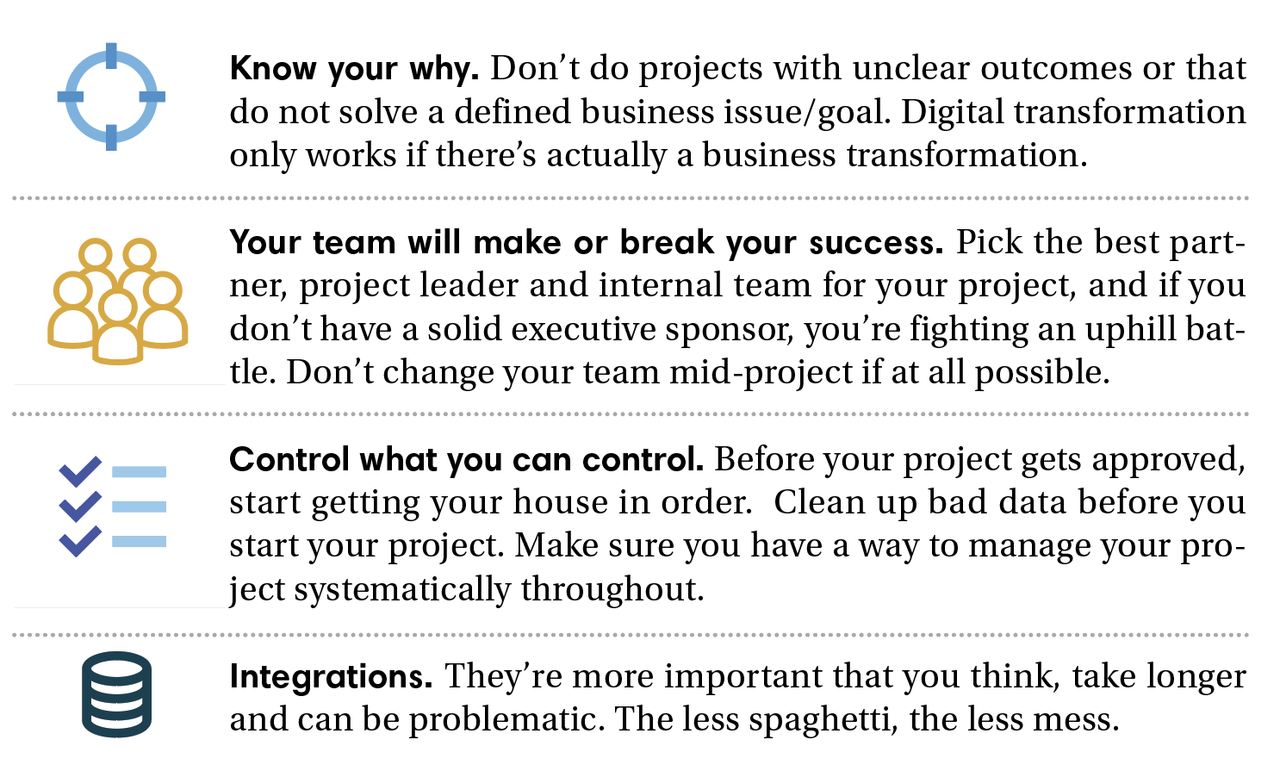

4 Takeaways for Successful Projects

Based on the ‘lessons learned’ area of our reviews, customers have raised four main themes to consider when embarking on any project, from a full digital transformation effort to a Phase 2 add-on module rollout. They are:

Know your why. Don’t do projects with unclear outcomes or that do not solve a defined business issue/goal. Digital transformation only works if there’s actually a business transformation.

Your team will make or break your success. Pick the best partner, project leader and internal team for your project, and if you don’t have a solid executive sponsor, you’re fighting an uphill battle. Don’t change your team mid-project if at all possible.

Control what you can control. Before your project gets approved, start getting your house in order. Clean up bad data before you start your project. Make sure you have a way to manage your project systematically throughout.

Integrations. They’re more important than you think, take longer than expected and can be problematic. The less spaghetti, the less mess.