Artificial intelligence (AI) is a core component of many companies’ digital transformation strategies today. However, a lack of trust in AI is holding some organisations back. To overcome these underlying fears, we need stronger performance monitoring mechanisms that can be relied upon to detect or, better yet, predict failures before they occur.

What ‘good’ looks like in terms of an AI agent’s performance will depend, to a certain extent, on the nature of the agent and how it is being used. AI agents are generally expected to make faster, higher-quality decisions, but they have operational boundaries outside of which their performance will start to degrade. It’s important for AI operators to detect when an AI agent has moved outside its optimal performance conditions.

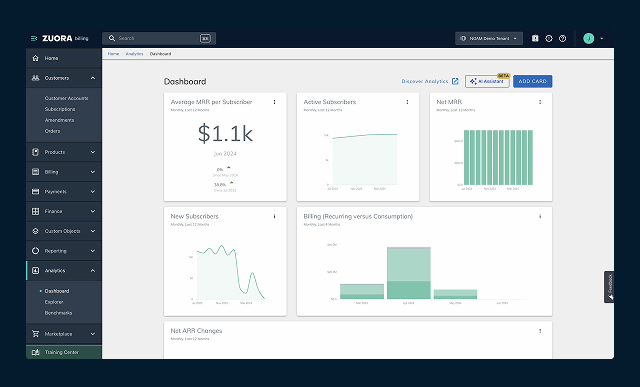

How can operators do this when the performance of AI agents can vary significantly according to their training and operating conditions? A diverse set of metrics can be used to monitor an AI agent against a set of performance targets or thresholds. AI operators then need to be ready to take corrective action as soon as performance begins to drift or becomes inconsistent. That action could be a human-led exception routine or retraining of the agent.
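
To make monitoring against targets or thresholds more concrete, here is a minimal Python sketch. Everything in it is a hypothetical assumption for illustration: the metric names (`accuracy`, `latency_ms`, `input_drift`), the acceptable bands, the consistency limit, and the escalation rule (one problem goes to human review, two or more trigger retraining). A real deployment would derive these from its own objectives.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Threshold:
    """Hypothetical acceptable operating band for one metric."""
    minimum: Optional[float] = None
    maximum: Optional[float] = None


def find_breaches(observed: Dict[str, float], thresholds: Dict[str, Threshold]) -> List[str]:
    """Return a description of every metric that falls outside its band."""
    breaches = []
    for name, band in thresholds.items():
        value = observed.get(name)
        if value is None:
            # A missing metric counts as a breach: performance cannot be confirmed.
            breaches.append(f"{name}: no data")
            continue
        if band.minimum is not None and value < band.minimum:
            breaches.append(f"{name}: {value} below {band.minimum}")
        if band.maximum is not None and value > band.maximum:
            breaches.append(f"{name}: {value} above {band.maximum}")
    return breaches


def is_inconsistent(history: List[float], max_std: float) -> bool:
    """Flag a metric whose recent values swing more than max_std (population std dev)."""
    if len(history) < 2:
        return False  # not enough data to judge consistency
    return statistics.pstdev(history) > max_std


def choose_action(breaches: List[str], retrain_after: int = 2) -> str:
    """Hypothetical escalation policy: review a single problem, retrain on several."""
    if not breaches:
        return "continue"
    if len(breaches) < retrain_after:
        return "human_review"
    return "retrain"


# Usage with made-up numbers.
thresholds = {
    "accuracy": Threshold(minimum=0.90),
    "latency_ms": Threshold(maximum=200.0),
    "input_drift": Threshold(maximum=0.1),
}
observed = {"accuracy": 0.87, "latency_ms": 150.0, "input_drift": 0.05}
breaches = find_breaches(observed, thresholds)

recent_accuracy = [0.95, 0.80, 0.96, 0.78]
if is_inconsistent(recent_accuracy, max_std=0.05):
    breaches.append("accuracy: unstable over recent window")

action = choose_action(breaches)
print(breaches, action)
```

Checking both the level of each metric and its stability reflects the two triggers described above: performance that has shifted, and performance that is not consistent.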

Addressing the challenges

A current challenge with AI is that it is often developed iteratively, which makes it harder to define a full set of success criteria up front. Problems can arise when an AI agent is given a loosely defined objective, or when training starts before the objective is complete. Further challenges arise if performance data is not available to measure the full spectrum of an agent’s behaviour.

To address these challenges, companies need to develop a detailed problem statement (a framing of the problem that will be solved using AI) with specific objectives. These objectives should then be mapped to a robust set of qualitative and quantitative performance metrics that measure the behaviour of the AI agent. And don’t forget the power of feedback: you need to tell the AI system its ‘score’ so it knows whether it is getting closer to or further from its objectives.

Using healthcare as an example, AI agents will play an increasingly important role in the diagnosis and prevention of disease. A framework for measuring their performance needs to be sensitive to the ‘life or death’ implications of failed predictions, because not all failures are equal. A patient may be more accepting of a false positive (a cancer diagnosis when they don’t have cancer) than a false negative (being told they are cancer-free when they do have cancer).
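
One way to make this asymmetry explicit is to weight the two kinds of error differently when scoring a model. The Python sketch below assumes a hypothetical 10:1 cost ratio between a missed cancer (false negative) and a false alarm (false positive); the ratio and the patient data are illustrative only, not clinical figures. It compares two made-up models with identical accuracy.

```python
from typing import Sequence

# Illustrative assumption: missing a cancer is treated as 10x worse than a false alarm.
FALSE_NEGATIVE_COST = 10.0
FALSE_POSITIVE_COST = 1.0


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of predictions that match the true label (1 = cancer, 0 = no cancer)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and the same length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def weighted_error_cost(
    y_true: Sequence[int],
    y_pred: Sequence[int],
    fn_cost: float = FALSE_NEGATIVE_COST,
    fp_cost: float = FALSE_POSITIVE_COST,
) -> float:
    """Total cost of errors, charging each error type its own weight."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be the same length")
    false_negatives = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    false_positives = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return false_negatives * fn_cost + false_positives * fp_cost


# Ten hypothetical patients, two of whom have cancer.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
model_a = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]  # two false alarms, no missed cancers
model_b = [0] * 10                         # predicts "no cancer" for everyone

for name, preds in [("A", model_a), ("B", model_b)]:
    print(name, accuracy(y_true, preds), weighted_error_cost(y_true, preds))
```

Accuracy alone rates both models equally; the weighted cost exposes that model B misses every patient who has cancer.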

Autonomous vehicles (AVs) serve as another example. Although studies have demonstrated that AVs have better safety records than human drivers, many people would still choose a human driver over an AV. Why? Because they don’t understand the limitations of AVs and, ultimately, their failure conditions. If people can’t predict when an AV will fail, they can’t judge when it can be trusted.

Successful digital transformation in any business doesn’t just happen; it is the outcome of careful planning and design. The same principle applies to AI. Companies will only get ‘good’ AI agents if they put upfront effort into setting the right objectives and performance metrics.

Taking a close look at performance will go a long way towards addressing the lack of trust that is the leading barrier to AI adoption. For AI to reach its full potential, we need a more standardised approach to measuring performance and a clearer definition of what ‘good’ looks like.

Cathy Cobey is EY’s global trusted AI advisory leader.

The views reflected in this article are the views of the author and do not necessarily reflect the views of the global EY organisation or its member firms.