Successful use of AI depends on enterprises designing trust into their systems sooner rather than later.

There is a long list of business use cases for AI that extends from customer service and manufacturing to building management, cybersecurity, and human resources. The effectiveness of AI in any of these scenarios depends on one very important factor: trust.

Artificial intelligence (AI) has the potential to transform a host of industries while changing our world for the better. In agriculture, for example, AI could enable us to produce greater quantities of food using fewer resources. In healthcare, AI tools could significantly improve the detection and treatment of disease. In the transport sector, AI is a crucial component of self-driving cars and can help with the planning, design and control of road networks.

Enterprises today are investing thousands – and in some cases millions – of dollars in developing sophisticated AI systems. Yet it only takes one mistake – or the perception that a mistake has occurred – for a user to stop trusting AI. Because of these trust issues, many enterprises are either holding back from taking full advantage of the opportunities that the technology presents or being cautiously selective about where to apply it. A recent European study, conducted jointly by EY and Microsoft, found that while 71 percent of respondents considered AI to be an important topic for executive management, just four percent were using it across multiple processes, including the performance of advanced tasks.

Trust is the foundation on which organisations can build stakeholder confidence and active participation in their AI systems. So, the big challenge for enterprise leaders is this: how can they earn and sustain user trust in AI over the long term?

Building trust in AI from the outset

The best way for leaders to address this challenge is by infusing ‘trust by design’ into their AI systems from the outset. That means that from the moment they first conceive of using an AI system within their business, they think about the expectations of stakeholders and how AI will enhance their products and services. They should also consider the governance and control structures they will have in place for the AI system, and which new mechanisms will be needed to respond to the unique risks posed by AI. These risks span four key areas:

• Algorithmic risks – where the algorithms used in the system rely on an inadequate model or features, deliver biased outcomes or are so-called “black box” algorithms that do not have transparent logic

• Data risks – where the system uses low-quality or biased data, or insufficiently representative training data

• Design risks – where the system does not align with stakeholder expectations, has unknown limitations and boundary conditions, or is able to subvert human agency

• Performance risks – where the system is asked to perform tasks that are beyond its technical capabilities, has performance that drifts over time, or is subject to adversarial attacks

Not only does AI have unique risks, it is also developing extremely rapidly. Enterprises cannot afford to rely solely on traditional risk management techniques when managing AI. They also need to look at:

• Data-based risk analytics

• Adaptive learning in response to user feedback and model validation

• Continuous monitoring and supervised response mechanisms

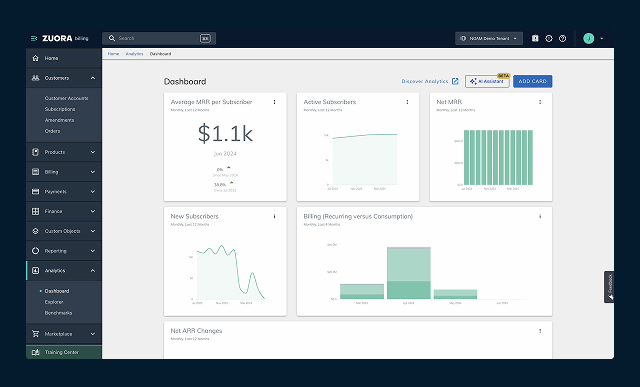

• Risk metrics and dashboards that are infused with data science

• Close collaboration between AI developers and risk professionals.
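To make the idea of continuous monitoring with a supervised response more concrete, here is a minimal sketch in Python. It is an illustration only, not a description of any particular product: the `DriftMonitor` class, its parameters and the default thresholds are all hypothetical. It tracks a rolling accuracy figure for a deployed model and flags the system for human review when accuracy falls too far below an agreed baseline.

```python
from collections import deque


class DriftMonitor:
    """Hypothetical monitor: flags a model for human review when its
    rolling accuracy falls below a baseline by more than a tolerance."""

    def __init__(self, baseline_accuracy, tolerance=0.05, window=100):
        # All three values are assumptions an enterprise would set itself.
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        # Keep only the most recent `window` outcomes (1 = correct, 0 = wrong).
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual):
        """Store whether the latest prediction matched the observed outcome."""
        self.outcomes.append(1 if prediction == actual else 0)

    def rolling_accuracy(self):
        """Return accuracy over the current window, or None if it is empty."""
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self):
        """True once the window is full and accuracy has drifted too low."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough evidence yet
        return self.rolling_accuracy() < self.baseline_accuracy - self.tolerance
```

In practice the flag would feed a dashboard or an escalation process, so that a risk professional, rather than the system itself, decides how to respond.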


Measuring the risks

If enterprises are to use AI to its full potential, they need to build in trust and be able to predict and measure the conditions that amplify risk and undermine that trust. This is a complex undertaking, since these conditions span a wide spectrum of factors, from technical design considerations to stakeholder impacts and the effectiveness and maturity of controls.

EY teams want to help enterprises navigate the complexity of identifying and reducing the risks posed by the use of AI at scale. With this in mind, the EY Trusted AI Platform was developed to address the issue of trust in technology by enabling enterprises to quantify the impact and trustworthiness of AI systems.

The EY Trusted AI Platform, which is enabled by cloud computing platform Microsoft Azure, offers enterprises an integrated approach for evaluating, monitoring and quantifying the impact and trustworthiness of their AI systems. The platform draws on advanced analytical models to evaluate the technical design of an AI system. It measures risk drivers that include the system’s objective, underlying technologies, its technical operating environment and the level of autonomy it has in comparison with human oversight.

After that, the platform produces a technical score that factors in any unintended consequences of system usage, such as social and ethical implications. Furthermore, an evaluation of governance and control maturity is undertaken to reduce residual risk. The risk scoring model is based on the EY Trusted AI framework, a conceptual framework that helps enterprises to understand and plan for how risks that emerge from new technologies such as AI could undermine their products, brands, relationships and reputations.
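The platform's actual scoring model is not set out in this article. Purely as an illustration of the general idea, the sketch below shows one way a technical score could be combined with control maturity to give a residual risk figure. Every name, scale and formula here is an assumption made for the example: the four drivers are scored from 0.0 to 1.0, averaged with equal weight, scaled by an impact factor, and then reduced in proportion to control maturity.

```python
from dataclasses import dataclass


@dataclass
class RiskDrivers:
    # Hypothetical 0.0 (low risk) to 1.0 (high risk) scores for the
    # drivers named in the text; the scale is an assumption.
    objective: float
    technology: float
    operating_environment: float
    autonomy: float


def technical_score(drivers: RiskDrivers, impact: float) -> float:
    """Average the four drivers with equal weight, then scale by an assumed
    impact factor (0.0 to 1.0) standing in for unintended consequences."""
    base = (
        drivers.objective
        + drivers.technology
        + drivers.operating_environment
        + drivers.autonomy
    ) / 4
    return base * impact


def residual_risk(technical: float, control_maturity: float) -> float:
    """Reduce the technical score in proportion to control maturity,
    where 0.0 means no controls and 1.0 means fully mature controls."""
    if not 0.0 <= control_maturity <= 1.0:
        raise ValueError("control_maturity must be between 0.0 and 1.0")
    return technical * (1.0 - control_maturity)
```

A real model would almost certainly weight the drivers differently and draw on far richer inputs; the point is only that stronger governance and controls lower the risk that remains.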


Strategy for AI success

Ultimately, there is no such thing as a one-size-fits-all strategy for enterprises when they are looking to preserve trust and manage the risks posed by AI systems. Each strategy will vary according to the expectations and needs of stakeholders, the unique technical specifications of systems, the competitive landscape of each sector and the legal and regulatory environment in which the enterprise operates.

Nevertheless, it is essential that all enterprises make trust a core pillar of their strategy when they are looking to implement AI at scale. Once AI has been implemented, continuous monitoring mechanisms should be put in place to confirm that all systems are operating as intended. If an AI system is to be trusted by an enterprise’s stakeholders – its customers, employees, regulators and suppliers – it must not only demonstrate ethical and social responsibility but also perform as expected. It is imperative that organisations embed trust in their AI systems from the minute they start to think about them, rather than treating it as a consideration for later down the road.

Cathy Cobey is EY’s global trusted AI advisory leader.

The views reflected in this article are the views of the author and do not necessarily reflect the views of the global EY organisation or its member firms.