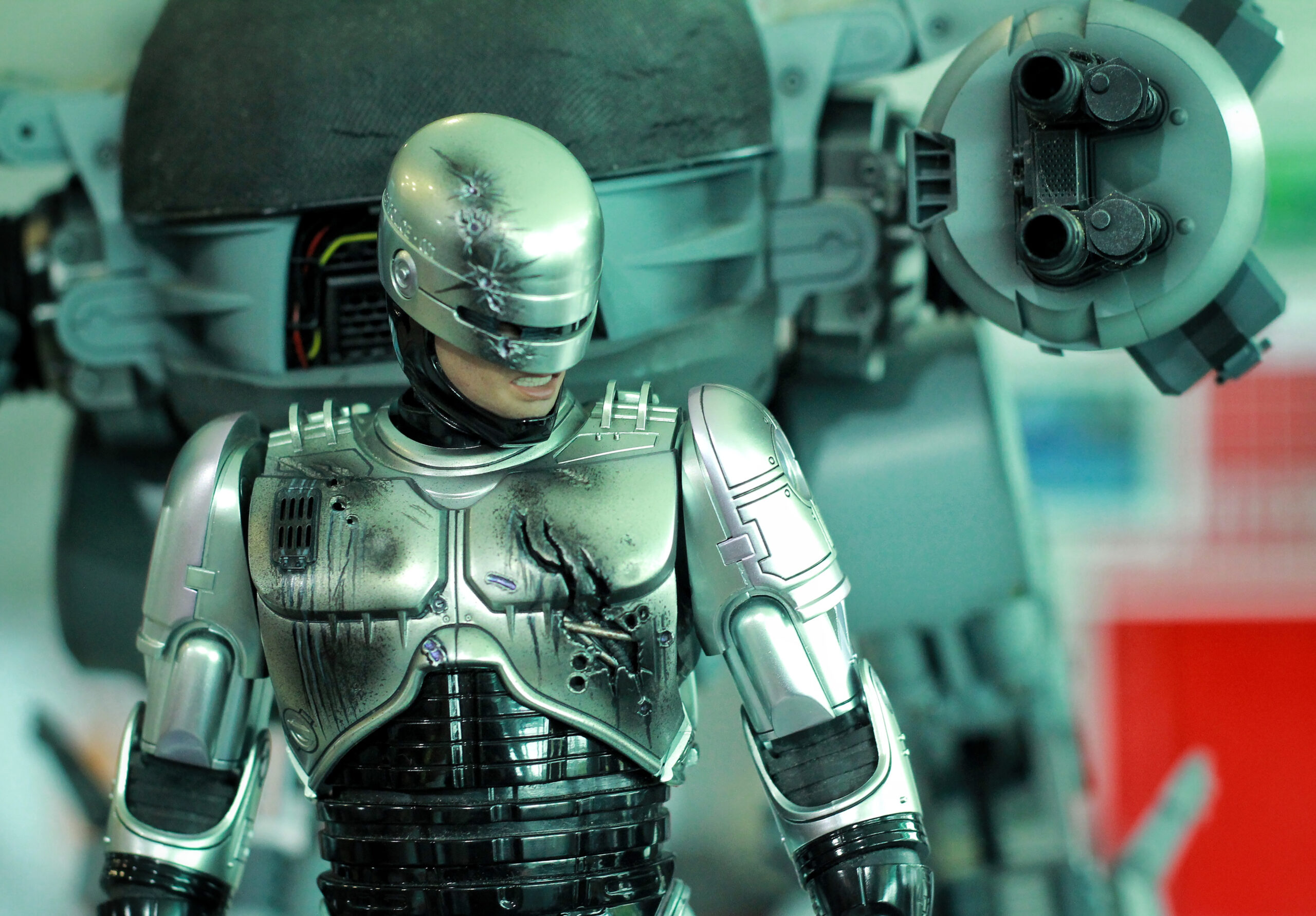

If you’re of a certain age, you probably recall sitting wide-eyed in 1987 as you watched the first RoboCop movie. The storyline is designed to reel in teenage fantasists: when career cop Murphy is shot pursuing criminals, his corpse becomes the donor for the Omni Consumer Products (OCP) crime-fighting robot.

RoboCop is programmed with four prime directives. The first three are to serve the public trust, to protect the innocent, and to uphold the law. Directive 4 remained classified.

Of its time, RoboCop served up a moral dilemma and marked out a social concern for future generations, one that would be repeated with ever better special effects in human-AI hybrid films like I, Robot, Ex Machina, and Her.

Wind forward 35 years from RoboCop’s original screening and we now have practical AI. That means we now face the moral dilemmas first posed in our teenage years.

Sh1t has gotten real

Exactly a decade after RoboCop, I was on my first SAP project. I set my sights high and had managed to bag a role designing the sales and delivery functionality for a toilet roll manufacturer. Classy, I know.

It all went well in testing. Until go-live, that is, when there were 20 trucks parked outside the warehouse waiting for orders to be picked and shipping notifications to be printed. I’d single-handedly stopped the business from supplying the UK’s supermarkets with their just-in-time orders of loo roll. As it unfolded in front of me, I was already reading my ERP horror story in imaginary newspaper headlines, before realizing that those newspapers might have to be put to better use very shortly.

The root cause of the problem wasn’t my SAP configuration or process design. It was master data, the base master data that makes its way into business transactions and causes wrinkles in business processes. Those wrinkles become waves, and waves become tsunamis. Before you know it, you have a line of countless trucks and their angry drivers shouting at you because they’re over their nine-hour tacho limit and want to get home for the weekend.

But that bad data in transactions becomes bad data in reports, and also within clever analytics dashboards. Intelligence becomes infected, and human decision making gets tainted.

For decades, ERP consultants have harped on about the importance of data quality. But businesses that run ERP rarely take master data quality seriously. Data cleansing is seen as an implementation activity, not as a housekeeping ritual, nor a philosophy.

Bad data is tolerable in rogue transactions and can be explained away in erroneous reports.

But what if bad data makes its way into algorithms, into automation, into – dare I say it – robotics?

What if decisions are made automatically based on bad data?

What if the robot now makes tainted decisions?

High automation is good, right? RPA, after all, can save time, reduce effort, improve customer experience, and allow us to redeploy scarce human capital on better activity.

There is a problem, though.

“You call this a glitch?”

High automation based on bad data is like RoboCop on drugs. Anything could happen.

Directive 4 stated that an OCP product shall not act against OCP’s best interests. This was designed to prevent RoboCop arresting senior OCP officials, stopping the fearsome robot’s firepower being turned on its creators.

Unfortunately, your business doesn’t have a Directive 4. Your RPA, AI, machine learning and algorithms will take your data – good, bad and ugly – and simply use it as a basis for decision making.

Which is why you should really start treating data as an asset on your balance sheet, and not as an overhead on your P&L.

“Your move, creep.”

Stuart Browne is the founder and managing director of leading UK independent ERP consultancy Resulting IT.