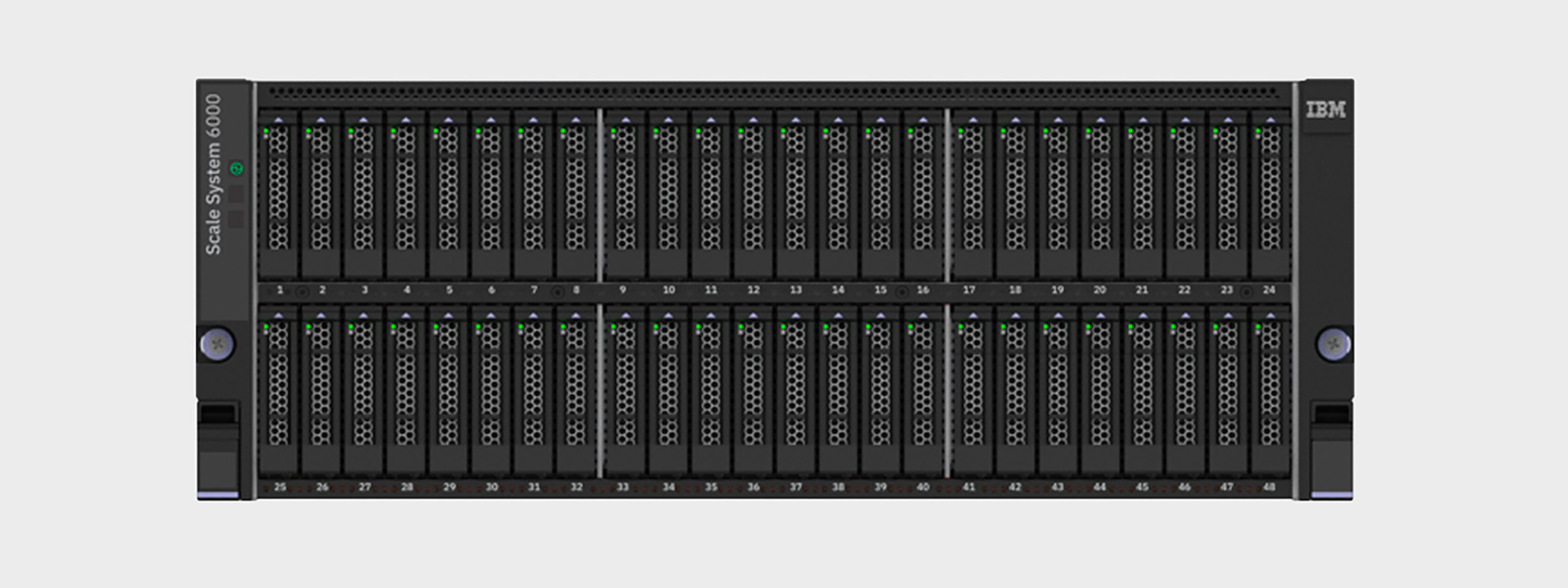

IBM has introduced its new cloud-scale global data platform, IBM Storage Scale System 6000, to meet today’s data-intensive and AI workload demands.

This cloud data platform is the company’s latest offering in the IBM Storage for Data and AI portfolio and builds on IBM’s leadership position with a high-performance parallel file system designed for data-intensive use cases. Each system provides up to 7 million IOPS and up to 256 GB/s of throughput for read-only workloads in a 4U footprint.

The IBM Storage Scale System 6000 is optimized for storing the semi-structured and unstructured data that organizations generate daily, including video, imagery, text and instrumentation data, and that accelerates the growth of their digital footprint across hybrid environments.

With the IBM Storage Scale System, clients can expect greater data efficiencies and economies of scale with the addition of IBM FlashCore Modules (FCM), faster adoption and operationalization of AI workloads with IBM watsonx, and faster access to data, with over 2.5x the GB/s throughput and 2x the IOPS performance of market-leading competitors.

The University of Queensland currently uses the IBM Storage Scale global data platform and IBM Storage Scale System to accelerate a variety of workloads, which has given it faster access to data and improved efficiency.

Jake Carroll, chief technology officer of the Research Computing Centre at The University of Queensland, Australia, said: “With our current Storage Scale Systems 3500, we are helping decrease time to discovery and increase research productivity for a growing variety of scientific disciplines. For AI research involving medical image analysis, we have decreased latency of access by as much as 60 percent compared to our previous storage infrastructure. For genomics and complex fluid dynamics workloads, we have increased throughput by as much as 70 percent.”

Carroll added: “IBM’s Storage Scale System 6000 should be a gamechanger for us. With the specs that I’ve seen, by doubling the performance and increasing the efficiency, we would be able to ask our scientific research questions with higher throughput, but with a lower TCO and lower power consumption per IOP, in the process.”

Denis Kennelly, general manager, IBM Storage, said: “The potential of today’s new era of AI can only be fully realized, in my opinion, if organizations have a strategy to unify data from multiple sources in near real-time without creating numerous copies of data and going through constant iterations of data ingest.

“IBM Storage Scale System 6000 gives clients the ability to do just that – bring together data from core, edge and cloud into a single platform with optimized performance for GPU workloads.”